Implementing Linear Gradient Descent in Octave

Reflections

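Machine learning feels like numerical analysis and other math courses applied on a computer. It reminds me of the line often attributed to Richard Feynman: "Physics is to mathematics what sex is to masturbation." Heh.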

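Octave, Scilab, and MATLAB are three numerical computing tools with similar programming styles. The first two are open source, while MATLAB is commercial. Octave is more than enough for machine learning, so I chose Octave for this implementation.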

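I won't explain much machine learning theory here, because someone else already teaches it extremely well: Andrew Ng. See his course handouts and materials (Handouts and Materials).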

Batch gradient descent code

[plain]

## batch_gradient.m
## -*- texinfo -*-
## @deftypefn {Function File} {} [ theta ] = batch_gradient ( x, y)
## Return the parameters of the linear function y = theta(2:m+1)*x' + theta(1),
## where x is an n-by-m matrix (n training samples, m features)
## and y is a 1-by-n row vector of targets.
## It uses the batch gradient descent algorithm.
## For example:
##
## @example
## @group
## x = [1 4; 2 5; 5 1; 4 2];
## y = [19 26 19 20];
## batch_gradient (x, y)
## @result{} [0.0060406 2.9990063 3.9990063]
## @end group
## @end example
## The exact digits vary between runs because theta starts from random values.
## @seealso{stochastic_gradient}
## @end deftypefn
## Author: xiuleili <xiuleili@XIULEILI>
## Created: 2013-04-26

function [ theta ] = batch_gradient (x, y)
  [n, m] = size (x);        # n training samples, m features
  [my, ny] = size (y);
  theta = rand (1, m+1);    # random start; theta(1) is the intercept
  if (ny != n || my != 1)
    error ("x should be a matrix with (n,m) and y must be (1,n), where n is the count of training samples.");
  end
  one = ones (n, 1);
  X = [one x]';             # (m+1)-by-n; the row of ones carries the intercept
  learning_rate = 0.01;
  err = 1;                  # named err so it does not shadow the built-in error()
  threshold = 0.000001;
  iterations = 0;           # named iterations so it does not shadow the built-in times()
  start_time = clock ();
  while err > threshold
    # One update using all samples at once.
    theta += learning_rate * (y - theta*X) * X';
    # Half the sum of squared residuals.
    err = sum ((theta*X - y).^2) / 2;
    iterations += 1;
    printf ("[%d] the current err is: %f\n", iterations, err);
    disp (theta);
    if (iterations > 10000000000)
      break;
    end
  end
  end_time = clock ();
  # etime returns the elapsed seconds between two clock() values.
  disp (etime (end_time, start_time));
endfunction


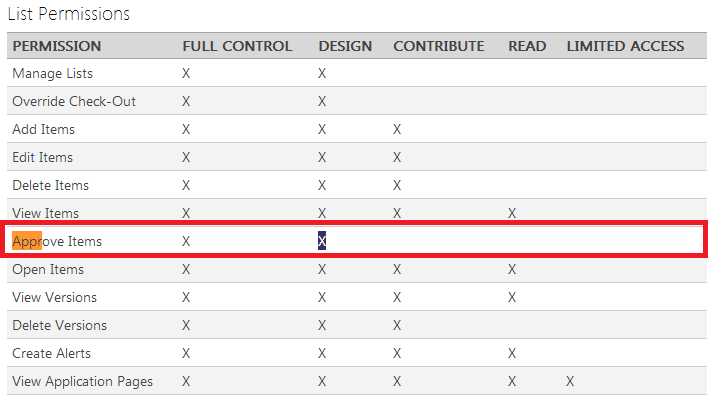

Usage is shown in the screenshot above.
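For reference, the update line inside the `while` loop of `batch_gradient` can be read as one step of gradient descent on a squared-error cost. The notation below is my own shorthand, not something from the original listing: $\theta$ is the 1-by-(m+1) row vector `theta`, $X$ is the (m+1)-by-n matrix built in the code (one sample per column, with a leading 1), $y$ is the 1-by-n target row, and $\alpha$ stands for `learning_rate`.

$$
J(\theta) = \frac{1}{2} \sum_{k=1}^{n} \left( \theta X_{:,k} - y_k \right)^2 = \frac{1}{2} \left\lVert \theta X - y \right\rVert^2
$$

$$
\nabla_\theta J(\theta) = (\theta X - y)\, X^{\mathsf T}
$$

$$
\theta \leftarrow \theta - \alpha \, \nabla_\theta J(\theta) = \theta + \alpha \, (y - \theta X)\, X^{\mathsf T}
$$

The last form is what the code evaluates on every pass, and the loop stops once $J(\theta)$ drops below `threshold`.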

Stochastic gradient descent source code

[plain]

## stochastic_gradient.m
## -*- texinfo -*-
## @deftypefn {Function File} {} [ theta ] = stochastic_gradient ( x, y)
## Return the parameters of the linear function y = theta(2:m+1)*x' + theta(1),
## where x is an n-by-m matrix (n training samples, m features)
## and y is a 1-by-n row vector of targets.
## It uses the stochastic gradient descent algorithm.
## For example:
##
## @example
## @group
## x = [1 4; 2 5; 5 1; 4 2];
## y = [19 26 19 20];
## stochastic_gradient (x, y)
## @result{} [0.0060406 2.9990063 3.9990063]
## @end group
## @end example
## The exact digits vary between runs because theta starts from random values.
## @seealso{batch_gradient}
## @end deftypefn
## Author: xiuleili <xiuleili@XIULEILI>
## Created: 2013-04-26

function [ theta ] = stochastic_gradient (x, y)
  [n, m] = size (x);        # n training samples, m features
  [my, ny] = size (y);
  if (ny != n || my != 1)
    error ("x should be a matrix with (n,m) and y must be (1,n), where n is the count of training samples.");
  end
  X = [ones(n,1) x]';       # (m+1)-by-n; the row of ones carries the intercept
  theta = rand (1, m+1);    # random start; theta(1) is the intercept
  learning_rate = 0.01;
  errors = 1;
  threshold = 0.000001;
  iterations = 0;           # named iterations so it does not shadow the built-in times()
  start_time = clock ();
  while errors > threshold
    # One pass over the data, updating theta after every single sample.
    for k = 1:n
      xx = X(:,k);
      theta += learning_rate * (y(k) - theta*xx) * xx';
    end
    # Sum of squared residuals over the whole training set.
    errors = sum ((y - theta*X).^2);
    iterations += 1;
    printf ("[%d] errors = %f\n", iterations, errors);
    disp (theta);
    if (iterations > 10000000000)
      break;
    end
  end
  end_time = clock ();
  # etime returns the elapsed seconds between two clock() values.
  disp (etime (end_time, start_time));
endfunction
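As a rough reading of the inner `for` loop in `stochastic_gradient`, each sample triggers its own small update instead of waiting for the whole batch. The symbols are my own shorthand, not part of the original listing: $x^{(k)}$ is column $k$ of `X` (the sample with a leading 1), $y_k$ is its target, $\theta$ is `theta`, and $\alpha$ stands for `learning_rate`.

$$
\theta \leftarrow \theta + \alpha \left( y_k - \theta\, x^{(k)} \right) {x^{(k)}}^{\mathsf T}, \qquad k = 1, \dots, n
$$

After each full pass over the $n$ samples, the code measures the total squared residual and repeats until it falls below `threshold`:

$$
E(\theta) = \sum_{k=1}^{n} \left( y_k - \theta\, x^{(k)} \right)^2
$$

Note that this stopping measure has no factor of $\tfrac{1}{2}$, unlike the one used in `batch_gradient`, so the same `threshold` value is slightly stricter here.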
